The Past, Present and Future of Augmented Reality


With augmented reality experiencing quite a boom, let’s dive into its history, sort out what it is and how it works, see how it could be used and what augmented reality means for people and business, and look out for its future.

What it is

Let’s start, in natural order, with the definition of augmented reality. Courtesy of Wikipedia: “Augmented reality (AR) is a live direct or indirect view of a physical, real-world environment whose elements are augmented (or supplemented) by computer-generated sensory input including sound, video, graphics or GPS data.” AR blends digital components into the view of the real world to enhance perception and/or add useful and entertaining information. This can be done either in the user’s field of view (FOV) or as an overlay on a screen. It is a kind of computer-mediated reality in which the computer uses sensor arrays, tracking, visual odometry, object detection and image processing algorithms to create a data-rich overlay that is easily told apart from the real world.

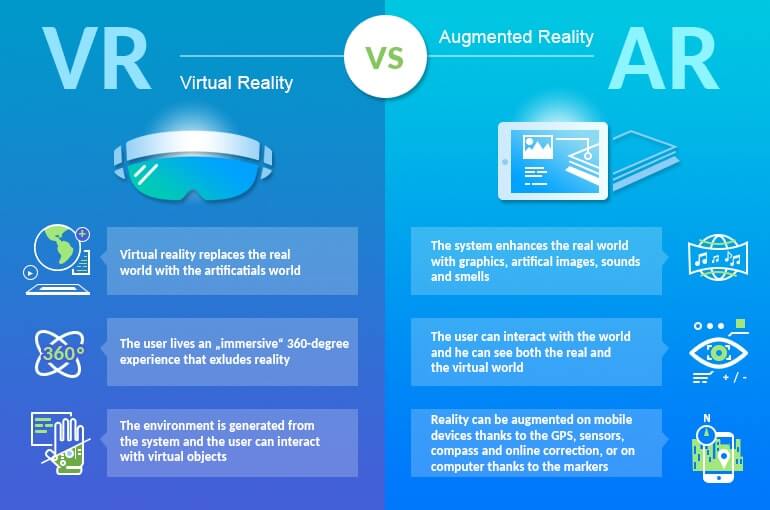

Augmented, not virtual

Augmented reality is often confused with virtual reality. The core difference between them is the resulting user experience. VR creates a fully simulated environment and intentionally isolates the user from the real world, whereas AR is all about interacting with the real world. AR enhances real-world perception; VR substitutes it.

How It Works

What are the essentials of how an AR system works? The basic idea is to capture an image of the real world, process it, process an auxiliary data stream defined by the specific application, create an overlay that puts the desired data in the right places, combine it with the original image and show the result to the user. All of this must happen quickly and seamlessly, with low latency, especially on FOV systems. Achieving the proper effect takes a combination of sophisticated software and cutting-edge hardware.
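The overlay-and-combine part of that loop can be sketched as a tiny per-frame function. This is a minimal illustration under stated assumptions, not any real AR framework: a frame is a small grid of grayscale values in [0, 1], tracking is skipped and the target region `box` is simply given, and the hypothetical `make_overlay` stands in for the application-specific step. The combine step is ordinary alpha ("over") compositing.

```python
from typing import List, Tuple

Frame = List[List[float]]                  # grayscale intensities in [0, 1]
Overlay = List[List[Tuple[float, float]]]  # (intensity, alpha) per pixel
Box = Tuple[int, int, int, int]            # x0, y0, x1, y1 (end-exclusive)


def make_overlay(width: int, height: int, box: Box) -> Overlay:
    """Hypothetical application step: a half-transparent white box
    where the tracked information should appear."""
    x0, y0, x1, y1 = box
    overlay = [[(0.0, 0.0)] * width for _ in range(height)]
    for y in range(y0, y1):
        for x in range(x0, x1):
            overlay[y][x] = (1.0, 0.5)
    return overlay


def composite(frame: Frame, overlay: Overlay) -> Frame:
    """Alpha 'over' compositing: out = alpha * overlay + (1 - alpha) * frame."""
    return [
        [a * o + (1.0 - a) * f for f, (o, a) in zip(frow, orow)]
        for frow, orow in zip(frame, overlay)
    ]


def process_frame(frame: Frame, box: Box) -> Frame:
    """One iteration of the loop: build the overlay, combine, return for display."""
    height, width = len(frame), len(frame[0])
    return composite(frame, make_overlay(width, height, box))


# Usage: a uniform gray 4x4 "camera frame" with a 2x2 label in the middle.
camera = [[0.2] * 4 for _ in range(4)]
shown = process_frame(camera, (1, 1, 3, 3))
```

In a real system this function would run for every camera frame, which is why the latency budget mentioned above matters so much.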

There are four general parts of an AR system, in natural order:

- sensors

- input devices

- processor

- display

Sensors and input

Sensor arrays provide the aforementioned auxiliary data stream. They can include GPS, accelerometers, solid-state compasses, gyroscopes, digital cameras, optical sensors, RFIDs, MST, magnetometers, proximity sensors, wireless sensors and countless others. Most of them serve to determine the position of the user and of his or her devices, head and hands: tracking is the main goal here.
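Tracking usually means fusing several of these sensors. One common and very simple technique, shown here only as an illustration and not as what any particular AR product uses, is the complementary filter: an integrated gyroscope is smooth but drifts, while the tilt derived from the accelerometer’s view of gravity is noisy but drift-free, so the two are blended. The blend factor `alpha = 0.98` and the single-axis (tilt-only) setup are assumptions.

```python
import math
from typing import Iterable, Tuple


def accel_tilt(ax: float, az: float) -> float:
    """Tilt angle (radians) implied by the direction of gravity on two accelerometer axes."""
    return math.atan2(ax, az)


def complementary_filter(samples: Iterable[Tuple[float, float, float]],
                         dt: float, alpha: float = 0.98) -> float:
    """Fuse (gyro_rate, ax, az) samples into one tilt estimate.

    gyro_rate is in rad/s; ax and az may use any consistent unit,
    since only their ratio matters.
    """
    angle = 0.0
    for gyro_rate, ax, az in samples:
        gyro_angle = angle + gyro_rate * dt   # smooth, but drifts with gyro bias
        acc_angle = accel_tilt(ax, az)        # drift-free, but noisy
        angle = alpha * gyro_angle + (1.0 - alpha) * acc_angle
    return angle


# Usage: a stationary device tilted 0.3 rad, with a gyro bias of 0.01 rad/s,
# sampled at 100 Hz for 20 s.
tilt = 0.3
readings = [(0.01, math.sin(tilt), math.cos(tilt))] * 2000
estimate = complementary_filter(readings, dt=0.01)
```

Integrating that biased gyro alone would accumulate 0.2 rad of drift over the 20 s; the filtered estimate instead settles at a small, bounded offset of alpha * bias * dt / (1 - alpha), about 0.005 rad here.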

Input devices make the experience interactive by letting the user operate the interface. They range from fairly conventional trackballs, styluses, wands and other pointers, through exo-gloves and other body-wear with embedded sensors, up to advanced gesture and speech recognition systems.

Processing, augmenting, mediating

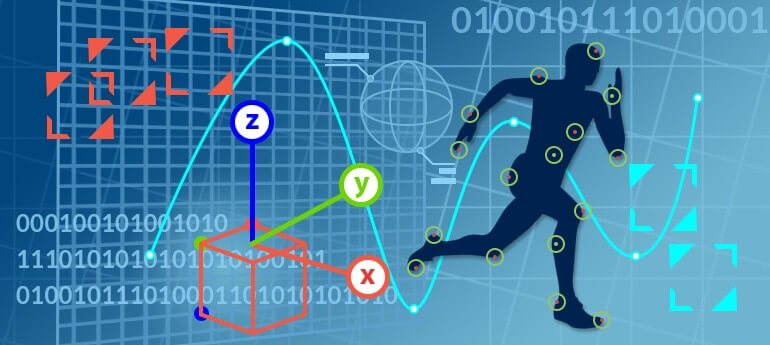

The processor is the software/hardware platform that creates the mediated reality. This is the part of the AR system where all the scary math happens. The goal is to retrieve real-world coordinates from the received images using computer vision techniques, most of which come from the field known as visual odometry. Did you know there is a planet in our Solar System inhabited entirely by robots? The rovers of the Mars exploration program benefit greatly from visual odometry, which lets them determine both their position and their orientation by processing the images provided by their cameras. While odometry usually means estimating motion from rotary encoders, that approach does not work on rough terrain or for non-wheeled vehicles. Tracking human movement and position is a very similar problem.
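Visual odometry itself (matching features between consecutive camera frames) is beyond a short example, but the bookkeeping that turns its output into a position is easy to show. Assuming each frame pair yields a relative motion `(dx, dy, dtheta)` expressed in the robot’s own frame, a minimal planar sketch chains those estimates into a world pose. The helper names `compose` and `integrate` are illustrative, not from any library.

```python
import math
from typing import Iterable, Tuple

Pose = Tuple[float, float, float]  # x, y, heading (radians) in the world frame


def compose(pose: Pose, delta: Pose) -> Pose:
    """Apply a motion (dx, dy, dtheta) given in the robot's own frame."""
    x, y, th = pose
    dx, dy, dth = delta
    return (
        x + dx * math.cos(th) - dy * math.sin(th),   # rotate the step into the world frame
        y + dx * math.sin(th) + dy * math.cos(th),
        (th + dth + math.pi) % (2 * math.pi) - math.pi,  # wrap heading to [-pi, pi)
    )


def integrate(deltas: Iterable[Pose], start: Pose = (0.0, 0.0, 0.0)) -> Pose:
    """Chain frame-to-frame motion estimates into a single world pose."""
    pose = start
    for d in deltas:
        pose = compose(pose, d)
    return pose


# Usage: four 1 m steps, each followed by a 90-degree left turn, trace a square.
end_pose = integrate([(1.0, 0.0, math.pi / 2)] * 4)
```

With exact steps the square closes back at the origin. With real, noisy estimates each error is carried into every later step, which is why visual odometry drifts over long distances.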

Various methods and algorithms are in use, applied in two consecutive stages.

The first stage is the detection of interest points: clearly (preferably mathematically) defined points of the input image with a well-defined position in image space. They must remain stable under both local and global image variations, such as changes in illumination or brightness. This is what allows interest point detection to be algorithmic, with reliable computation and high repeatability rates.
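A classic way to find such points is the Harris corner detector, used here as one illustrative example among many. It builds the structure tensor M of image gradients over a small window and scores each pixel with R = det(M) - k * trace(M)^2, which is large only where intensity changes in two directions at once. Because it works on gradients, adding a constant brightness offset leaves the response unchanged. A minimal pure-Python sketch, assuming a grayscale image stored as a list of rows, a 3x3 window and the common choice k = 0.04; `harris_response` and `strongest_corner` are illustrative names.

```python
from typing import List, Tuple

Image = List[List[float]]


def harris_response(img: Image, k: float = 0.04) -> Image:
    """Harris response R = det(M) - k * trace(M)^2 for each pixel.

    M is the structure tensor of image gradients summed over a 3x3 window.
    Pixels whose window would leave the image get 0.
    """
    h, w = len(img), len(img[0])
    # Central-difference gradients; left at 0 on the outermost border.
    ix = [[0.0] * w for _ in range(h)]
    iy = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            ix[y][x] = (img[y][x + 1] - img[y][x - 1]) / 2.0
            iy[y][x] = (img[y + 1][x] - img[y - 1][x]) / 2.0
    r = [[0.0] * w for _ in range(h)]
    for y in range(2, h - 2):
        for x in range(2, w - 2):
            sxx = syy = sxy = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    gx, gy = ix[y + dy][x + dx], iy[y + dy][x + dx]
                    sxx += gx * gx
                    syy += gy * gy
                    sxy += gx * gy
            r[y][x] = (sxx * syy - sxy * sxy) - k * (sxx + syy) ** 2
    return r


def strongest_corner(img: Image) -> Tuple[int, int]:
    """(row, col) of the maximum Harris response (first one in scan order on ties)."""
    r = harris_response(img)
    return max(((y, x) for y in range(len(r)) for x in range(len(r[0]))),
               key=lambda p: r[p[0]][p[1]])


# Usage: a bright 4x4 square on a dark 12x12 background, then the same scene brightened.
scene = [[1.0 if 4 <= y <= 7 and 4 <= x <= 7 else 0.0 for x in range(12)]
         for y in range(12)]
lit_scene = [[v + 0.25 for v in row] for row in scene]
corner = strongest_corner(scene)
```

The strongest response lands on the square’s top-left corner, and brightening the whole image leaves every response value, and therefore the detected point, unchanged.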

Naturally, which interest points are used depends on the specifics of the AR system; fiducial markers may also be involved. A variety of feature detection methods can be applied, such as edge, corner and blob detection or thresholding, and other image processing methods may also be useful.
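For fiducial markers, simple thresholding followed by blob detection is often enough to find candidate regions. The sketch below is an illustration, not any particular marker library’s method: it marks pixels darker than a threshold and groups them into 4-connected blobs, returning each blob’s bounding box. The threshold and `min_area` values are assumptions to be tuned per setup.

```python
from collections import deque
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (min_row, min_col, max_row, max_col), inclusive


def threshold(img: List[List[float]], t: float) -> List[List[bool]]:
    """Mark pixels darker than t, e.g. the black body of a printed marker."""
    return [[v < t for v in row] for row in img]


def find_blobs(mask: List[List[bool]], min_area: int = 1) -> List[Box]:
    """4-connected component labelling by breadth-first search."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    boxes: List[Box] = []
    for sy in range(h):
        for sx in range(w):
            if not mask[sy][sx] or seen[sy][sx]:
                continue
            queue = deque([(sy, sx)])
            seen[sy][sx] = True
            area, y0, x0, y1, x1 = 0, sy, sx, sy, sx
            while queue:
                y, x = queue.popleft()
                area += 1
                y0, x0, y1, x1 = min(y0, y), min(x0, x), max(y1, y), max(x1, x)
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if area >= min_area:  # drop specks of noise
                boxes.append((y0, x0, y1, x1))
    return boxes


# Usage: a dark 4x4 marker plus one dark speck of noise on a light background.
page = [[0.9] * 10 for _ in range(10)]
for row in range(2, 6):
    for col in range(3, 7):
        page[row][col] = 0.1
page[8][8] = 0.1
candidates = find_blobs(threshold(page, 0.5), min_area=4)
```

Only the marker survives the `min_area` filter; a real pipeline would go on to check each candidate’s shape and decode its pattern.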

The second stage is dedicated to retrieving the real-world coordinate system from the data processed in the first stage. The presence of fiducial markers or objects with known geometry allows the scene’s 3D structure to be pre-calculated, which greatly simplifies the job. When parts of the scene are unknown, SLAM (simultaneous localization and mapping) methods are frequently used to map relative positions. When no information about the scene geometry is available, bundle adjustment and other methods for reconstructing three-dimensional structures from two-dimensional image sequences are used; this family of methods is known as structure from motion.
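For a planar fiducial marker of known size, the four detected corners are enough to recover the mapping from the marker plane to the image, a homography H. Below is a minimal sketch using the direct linear transform with h33 fixed to 1 and a small Gaussian-elimination solver. Production systems would use more points, coordinate normalization and robust estimation, and would then combine H with the camera intrinsics to obtain a full 3D pose; all of that is omitted here, and the function names are illustrative.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Matrix = List[List[float]]


def solve(a: Matrix, b: List[float]) -> List[float]:
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate corners (e.g. three of them collinear)")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x


def homography(src: Sequence[Point], dst: Sequence[Point]) -> Matrix:
    """3x3 H mapping four marker-plane points onto four image points (h33 = 1)."""
    a: Matrix = []
    b: List[float] = []
    for (x, y), (u, v) in zip(src, dst):
        # u = (h11 x + h12 y + h13) / (h31 x + h32 y + 1), rearranged to be linear
        a.append([x, y, 1.0, 0.0, 0.0, 0.0, -x * u, -y * u])
        b.append(u)
        a.append([0.0, 0.0, 0.0, x, y, 1.0, -x * v, -y * v])
        b.append(v)
    h = solve(a, b) + [1.0]
    return [h[0:3], h[3:6], h[6:9]]


def project(h: Matrix, p: Point) -> Point:
    """Map a marker-plane point into the image (with the homogeneous divide)."""
    x, y = p
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)


# Usage: a unit-square marker seen as a perspective-distorted quad in the image.
marker = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
corners = [(100.0, 100.0), (200.0, 110.0), (190.0, 210.0), (95.0, 190.0)]
H = homography(marker, corners)
centre_in_image = project(H, (0.5, 0.5))
```

Once H is known, any point defined on the marker, such as the centre where a virtual object should sit, can be projected into the current frame with `project`.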

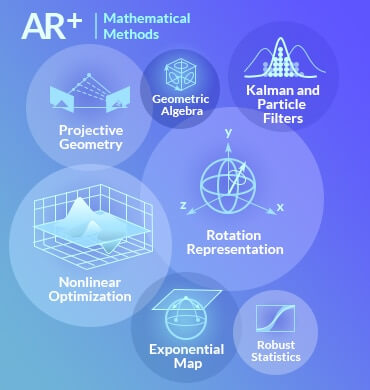

Here are the names of some of the mathematical methods used during the second stage, for further reading. They include:

All this requires serious, careful programming and is extremely demanding of computing power.

A dedicated organization, the Open Geospatial Consortium (OGC), has developed and now maintains a data standard called Augmented Reality Markup Language (ARML). It combines an XML grammar, which describes the location and appearance of virtual objects in the AR scene, with ECMAScript bindings that give dynamic access to those objects’ properties.


Augmented reality devices

And now the most geeky and visible part – the display.

There are four main types of AR displays:

- Optical projection systems – mostly HUDs and, rarer at present, spatial systems.

- Conventional monitors – good old tech, proven, reliable and easy to work with.

- Hand held devices – a super trendy platform for AR and the one responsible for the current boom. A very potent one, it must be said, thanks to the serious computing power of modern smartphones and tablets, their good screens and their extensive built-in sensor arrays. The ubiquity of modern handheld smart gadgets only helps.

- Display systems worn on the human body – oh! Here’s the geeky stuff.

Let’s take a closer look at some of those worn display systems.

Eyeglasses are eyewear with cameras that intercept the real-world view and project AR imagery through the eyepieces or reflect it off their surfaces. Google Glass is a good example of augmented reality glasses.

Head-mounted displays (HMDs), like the award-winning Meta 2 and the famous Microsoft HoloLens (often referred to as “smartglasses”), are head-worn display devices, attached to a harness or built into a helmet. They have small displays in front of the user’s eyes or use a combiner lens. They also carry a number of built-in sensors, typically allowing six-degrees-of-freedom tracking, and highly advanced sound systems.

Audacious, futuristic augmented reality contact lenses are in active development. The first contact lens displays were presented in 1999, and much progress has been made since then.
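Six degrees of freedom means the headset tracks three translations and three rotations. To keep a virtual object pinned to a spot in the room, the renderer re-expresses that spot in the head’s coordinate frame on every frame. A minimal sketch, assuming a Z-Y-X (yaw, pitch, roll) angle convention and a right-handed frame with x pointing forward, y to the left and z up; real devices usually report orientation as quaternions and define their own axes, and the function names here are illustrative.

```python
import math
from typing import List, Sequence

Vec3 = Sequence[float]


def rotation_zyx(yaw: float, pitch: float, roll: float) -> List[List[float]]:
    """Rotation matrix R = Rz(yaw) @ Ry(pitch) @ Rx(roll)."""
    cy, sy = math.cos(yaw), math.sin(yaw)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cr, sr = math.cos(roll), math.sin(roll)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def world_to_head(point: Vec3, head_pos: Vec3,
                  yaw: float, pitch: float, roll: float) -> List[float]:
    """Express a world-anchored point in the head frame: p_head = R^T (p_world - t)."""
    r = rotation_zyx(yaw, pitch, roll)
    d = [point[i] - head_pos[i] for i in range(3)]  # undo the 3 translations
    # Multiply by R transposed to undo the 3 rotations.
    return [sum(r[row][col] * d[row] for row in range(3)) for col in range(3)]


# Usage: the head turned 90 degrees to the left looks at a point 1 m to the world's left.
in_view = world_to_head((0.0, 1.0, 0.0), (0.0, 0.0, 0.0), math.pi / 2, 0.0, 0.0)
```

In this example the anchored point ends up straight ahead of the user, at (1, 0, 0) in head coordinates up to rounding.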

The Human Interface Technology Laboratory at the University of Washington is running a project to develop the VRD, the virtual retinal display, arguably the penultimate step before projecting data directly into the brain. By projecting images directly onto the user’s retina, the VRD creates the illusion of a fairly conventional-looking screen floating in front of the user’s eyes.

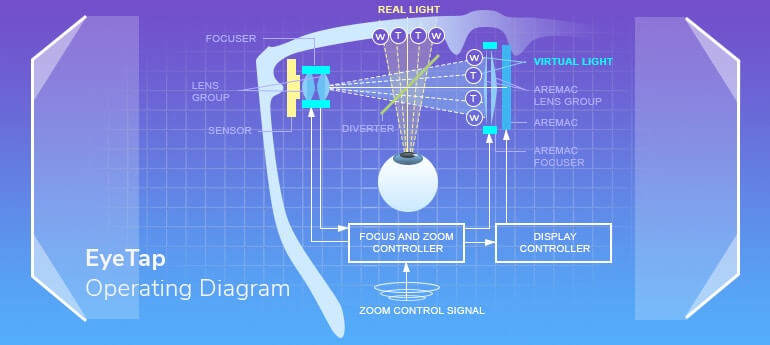

Oh, and the EyeTap. Black magic, lasers and Steve Mann (yes, the Chief Scientist at Meta). This augmented reality headset captures the light entering the user’s eye, processes and augments it, and replaces the real light rays with synthesized laser light. See the schematic diagram below:

The history

AR’s history is not as brief as one might think. BAE Systems developed the first HUD to enter service, in 1958, for the Blackburn Buccaneer attack jet, and it had a 25-year lifespan. GM brought the first HUD to a production car, the ’88 Oldsmobile Cutlass Supreme, and HUDs have since reached most commercial passenger aircraft, from the SAAB 2000 and Bombardier CRJ to entire Airbus and Boeing lineups. For a long time HUDs were the only widespread commercial AR product, even though the HMD had been invented in 1968 by Ivan Edward Sutherland, the famous Nebraskan Internet pioneer.

In 1980 Steve Mann (yes, him again) invented the first wearable computer vision system with text and graphical overlays on a photographically mediated reality. This was, without doubt, the birth of the notion of Augmediated Reality. A great deal of research was done through the 80s, 90s and 2000s: from military projects like BARS, and Mike Abernathy’s great work on identifying space debris by overlaying satellite geographic trajectories on live telescope video, to brilliant navigation projects and the familiar weather-forecast studio view, which Dan Reitan brought to TV in 1981.

But the real rise of AR in the consumer market came in 2008, when Wikitude launched its AR Travel Guide on the HTC Dream, the first commercially available smartphone running Android. Since then AR has climbed all the way to Niantic’s revolutionary megahits Ingress and Pokemon Go, which, released on both Android and iOS, brought AR not only to the mainstream consumer market but also into the news headlines.

The history of AR and some knowledge of its design tell us why it is rocketing now. With the deafening sonic boom of Pokemon Go’s popularity heard in every part of the planet, we can see that the answer is availability, together with enhancement through technology. All the needed hardware has become affordable, the IT industry has grown vast and highly developed, providing the necessary software expertise, and capable devices, at least basic ones, are in almost every pocket. And don’t forget easily accessible broadband internet connections.

Augmented reality apps

Now let’s look at the use of AR and some augmented reality examples.


There is also Star Walk, a great astronomical AR app that shows augmented data on constellations and planets when pointed at the sky.


- Augmented reality translation – just look at the Google Translate mobile app. It incorporates the Word Lens app by Quest Visual, which Google acquired back in 2014. It can translate signs and printed text in 27 languages in real-time video, and it can also translate 37 languages in photo mode and 32 languages in conversation mode. We have never been so close to the Star Trek universal translator.

- Augmented reality marketing – novelty and socialization, along with personalization and accessibility, are cited as possible advantages of AR marketing.

- Augmented reality games – one of the most obvious fields. With Geocaching (a tourism-oriented game with almost 3 million players around the globe), Ingress and Pokemon Go storming around, AR gaming undoubtedly has a bright future. New AR platforms like Microsoft HoloLens bring terrific possibilities for game developers.

- Augmented reality apps for education – overlaid 360-degree models help so much in visualizing educational material, in engineering, CAD, architecture and even anatomy, that their value is hard to overestimate. Training on a dummy while wearing an EyeTap is such a valuable experience, isn’t it?

- Augmented TV – companies like Sportvision provide broadcast enhancements for viewers’ convenience, such as virtual flags in NASCAR coverage.

There were also some interesting projects that were discontinued after being acquired by bigger companies. Junaio, the world’s first augmented reality browser, was acquired by Apple along with its parent company Metaio GmbH (Munich, Germany) in 2015 and has been closed ever since, but who knows what may come of it, especially given the news of Apple’s interest in investing in AR, inspired by the stunning success of Pokemon Go. String, a 2011 project, animated printable cards on an iPad screen by overlaying them on the live stream from the iPad camera. Urbanspoon, a great restaurant information and recommendation service founded in 2006, is now integrated into its new parent (since 2015), India-based Zomato.

Future of Augmented Reality

Serious players are involved in AR development, some of them startups and some quite established. In addition to those mentioned above, there are brilliant AR SDK developers like the Dutch company Layar and the famous Wikitude.

Consumer augmented reality trends differ considerably from military augmented reality projects. The civil market demands entertainment, augmented reality games, and augmented reality retail and e-commerce enhancements, whereas the military is focused on special navigational features, aiming, fast and silent communications and the provision of tactical data.

Medical augmented reality projects will probably serve mainly educational purposes at first, given the novelty of the technology, but augmented reality surgery is not far off. It may be hard to determine how far surgeons should rely on AR technology, but it is obvious that AR can help a lot.

Augmented reality driving is already here with the ambitious BMW Mini AR Driving Smartglasses. BMW also has a nice project (a bit like AR surgery, but on cars): an AR assistant for auto mechanics.

The one thing we could wish for is some unification: not many of us would like to have a separate pair of smartglasses for each and every daytime activity, and perchance a special HMD for dinner.

There are some concerns nevertheless

Both AR and VR are quite new to our world, and both are capable of seriously influencing the world as we know it. Social, economic, educational, legal, military and medical changes are coming, and it is yet to be known what they will be. New forms of art, new fashions, and drastic philosophical and lifestyle changes are at the gates. A number of technological barriers remain to be overcome, but human science is quite potent in this discipline, so it might be worth considering all of these changes before the radical technological breakthrough arrives. We do need to avoid mishandling the matter. Given the immense simultaneous advances in computing power, machine learning, object recognition, AI and ubiquitous computing, adding AR and VR technologies to the cocktail may become a step toward a new evolutionary level, some sort of bio-digital hybridization, and this appears to be a near-future event. That does not necessarily seem bad; it even sounds promising for communication capabilities and possible lifespan prolongation. But with poor handling, you and your friends might suddenly find yourselves repeating: “We are the Borg. You will be assimilated. Resistance is futile!”

If you are interested in action game development and design, Multi-Programming Solutions invites you to visit our portfolio and evaluate the range of opportunities we offer our customers.

36 Kings Road

CM1 4HP Chelmsford

England